1 INTRODUCTION

I recently needed to recommend a tool to use for a scalability testing

project, and I was in the fortunate situation of having some time to survey the field,

and to look into the top contenders in greater depth. From an

original list of over 40 candidates, I selected three finalists in the open-source and commercial categories.

I then took some time to look at

them in detail, to determine which tool to recommend for the ongoing

scale testing effort. Since I have seen several questions about how

these tools compare to each other on various mailing lists, I'm sharing

my findings here in the hopes that others will find them useful.

My three finalists were Load Runner, from Mercury Interactive;

JMeter, from the Apache Foundation; and The Grinder, an open-source

project hosted on SourceForge.

2 SUMMARY OF RESULTS

I found that I could use any of them and get a reasonably good amount

of scale test coverage. Each tool has unique things it does very

well, so in that sense, there is no “wrong answer.” Conversely,

each of the tools I considered has unique deficiencies that will

impede or block one or more of the scenarios in our test plan. So

there is no “right answer” either – any option selected will be

something of a trade-off.

Based on this research, I recommended The Grinder as the tool to go

forward with. It has a simple, clean UI that clearly shows what is

going on without trying to do too much, and offers great power and

simplicity with its unique Jython-based scripting approach. Jython

allows complex scripts to be developed much more rapidly than in more

formal languages like Java, yet it can access any Java library or

class easily, allowing us to re-use elements of our existing work.

Mercury's Load Runner had a largely attractive feature set, but I

ultimately disqualified it due to shortcomings in these make-or-break

areas:

Very high price to license the software.

Generating unlimited load is not permitted. With the amount

of load our license allows, I will be unable to effectively test important

clustered server configurations, as well as many of our “surge”

scenarios.

Very weak server monitoring for Solaris environments. No

support for monitoring Solaris 10.

JMeter was initially seen as an attractive contender, with its

easy, UI-based script development, as well as script management and

deployment features. Its UI is feature-rich and this product has

the Apache branding. It was ultimately brought down by the bugginess

of its UI though, as several of its key monitors gave incorrect

information or simply didn't work at all.

3 Comparison Tables

All the items in the tables below are discussed in greater detail

in the following sections. These tables are meant to give a quick overview.

3.1 Critical Items

There are several features that are key to any scale testing

effort. Items in this table are key to our efforts. Not having any

of these will seriously impact our ability to generate complete scale

test coverage.

-

* Multiple workarounds are being investigated, including

calling native (libcurl) code for the most intensive downloads.

3.2 Non-Critical Items

Items in this section are not make-or-break to our test effort,

but will go a long way to making the test effort more effective.

3.2.1 General

-

3.2.2 Agents

-

3.2.3 Controller

-

4 GENERAL

4.1 Server Monitoring -- Windows, Solaris, etc.

4.1.1 Load Runner

Mercury is extremely strong in this area for Windows testing.

Unfortunately, it is very weak on Unix/Solaris. For Windows hosts,

Load Runner uses the native performance counters available in

perfmon. This allows monitoring myriad information from the OS, as

well as metrics from individual applications (such as IIS) that make

their information available to perfmon.

For Solaris hosts, Load Runner is restricted to the performance

counters available via rpc.rstatd. This means some very basic

information on CPU and memory use, but not much else. Note

that Load Runner does not currently support any kind of performance

monitoring on Solaris 10.

4.1.2 JMeter

JMeter has no monitoring built in. Thus, wrapper scripts are

required to synchronize test data with external perf monitoring data.

This is the approach I used to great effect with our previous test

harness. The advantage of this method is I can monitor (and graph!)

any information the OS makes available to us. Since the amount of

data available to us is quite large, this is a powerful technique.
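As a rough illustration of the wrapper approach, the sketch below lines up client-side test samples with server-side monitor samples by timestamp. The record layout (epoch seconds plus a value) and the function name `align_samples` are assumptions made for this example; they are not a format produced by JMeter or by any OS monitoring tool.

```python
import bisect

def align_samples(test_samples, monitor_samples):
    """Pair each test sample with the closest-in-time monitor sample.

    Both inputs are lists of (epoch_seconds, value) tuples sorted by time.
    Returns a list of (epoch_seconds, test_value, monitor_value).
    """
    monitor_times = [t for t, _ in monitor_samples]
    aligned = []
    for t, test_value in test_samples:
        i = bisect.bisect_left(monitor_times, t)
        # Candidates: the monitor sample just before and just after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(monitor_samples)]
        if not candidates:
            continue  # no monitor data at all
        best = min(candidates, key=lambda j: abs(monitor_times[j] - t))
        aligned.append((t, test_value, monitor_samples[best][1]))
    return aligned
```

Once the two data sets share a time axis like this, any server metric can be graphed next to the client-side numbers.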

4.1.3 The Grinder

The same wrapper-based approach would be required here as I

detailed above for JMeter.

4.2 Can generate unlimited load

This is a make-or-break item. There are many scenarios I just

can't cover if I can only open a few thousand socket connections to

the server.

4.2.1 Load Runner

Load Runner restricts the number of vusers you can run. Even

large amounts of money only allow a license for a modest number of

users. Historically, the rate for 10,000 HTTP vusers has been

$250,000. However, on a per agent basis, load is generated very

efficiently so it may take less hardware to generate the same amount

of load. (But for the money you spend on the Load Runner license,

you could buy a LOT of load generation hardware!)

4.2.2 JMeter

Since this is Free/Open Source, you may run as many agents as you

have hardware to put them on. You can add more and more load

virtually forever, as long as you have more hardware to run

additional agents on. However, in specific unicast scenarios, such

as repeatedly downloading very large files (like PIPEDSCHEDULE), the

ability of agents to generate load falls off abruptly due to memory

issues.

4.2.3 The Grinder

In this matter The Grinder's story is the same as JMeter's. The

limit is only the number of Agents. The Grinder suffers the same

lack of ability to effectively download large files as JMeter. A

workaround that uses native code (libcurl) to send requests is being

investigated.

4.3 Can run in batch (non-interactive) mode

4.3.1 Load Runner

No. Hands-free runs can be scheduled with the scheduler, but

multiple specific scenarios cannot be launched from the command line.

This may be adequate for single tests; it's not clear how this would

work if a series of automated tests was desired.

4.3.2 JMeter

Yes, the ability to do this is supported out of the box. However,

it can only be run from a single agent; the distributed testing

mechanism requires the UI. So for automated nightly benchmarks it

may be ok, but for push-to-failure testing where much load is

required, the UI is needed. It would presumably be possible to have

a wrapper script launch JMeter in batch mode at the same time on

multiple agents. This would achieve arbitrary levels of load, but

would not have valid data for collective statistics like total

transactions per second, total transactions, etc.
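A wrapper along those lines might look like the sketch below, which only builds the per-agent command lines and does not run them. The host names, file paths, and the use of `ssh` are assumptions for illustration; `-n` (non-GUI mode), `-t` (test plan file), and `-l` (results log file) are JMeter command-line options.

```python
def batch_commands(agents, test_plan, results_dir):
    """Build one ssh command per agent that starts JMeter in non-GUI mode."""
    commands = []
    for host in agents:
        # Each agent writes its own results log, named after the host.
        log_file = "%s/%s.jtl" % (results_dir, host)
        commands.append(
            ["ssh", host, "jmeter", "-n", "-t", test_plan, "-l", log_file]
        )
    return commands

if __name__ == "__main__":
    # Hypothetical agent names; print the commands instead of executing them.
    for cmd in batch_commands(["agent1", "agent2"], "plan.jmx", "/tmp/results"):
        print(" ".join(cmd))
```

Because each agent keeps a separate log, nothing in this arrangement produces combined figures while the test is running.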

4.3.3 The Grinder

As with JMeter, a single agent can be run from the command line.

See JMeter comments, above.

4.4 Ease of Use

4.4.1 Load Runner

4.4.1.1 Installation

Installation takes a ton of time, a lot of disk space, and a very

specific version of Windows. But it's as simple as running a Windows

installer, followed by 3 or 4 product updaters.

4.4.1.2 Setting up Simple tests

For HTTP tests, Load Runner is strong in this category, with its

browser recorder and icon-based scripts.

4.4.1.3 Running Tests

The UI of the controller is complex and a bit daunting. There is

great power in the UI if you can find it.

4.4.2 JMeter

4.4.2.1 Installation

Be sure Sun's JRE is installed. Unpack the tar file. Simple.

4.4.2.2 Setting up Simple tests

Very quick. Start up the console, a few clicks of the mouse, and

you are ready to generate load. Add thread group, add a sampler, and

you have the basics. Throw in an assertion or two on your sampler to

validate server responses.

4.4.2.3 Running Tests

Both distributed and local tests can be started from the UI. A

menu shows the available agents, and grays out the ones that are

already busy. Standalone tests can be started from the command line.

JMeter wins this category hands down.

4.4.3 The Grinder

4.4.3.1 Installation

Installation is as simple as installing Java and unpacking a tar

file.

4.4.3.2 Setting up Simple tests

Setting up tests, even simple tests, requires writing Jython code.

So developer experience is important. A proxy script recorder is

included to simplify this. In addition, there are many useful

example scripts included to help you get started.

4.4.3.3 Running Tests

This involves configuring a grinder.properties file, manually starting

an agent process, manually starting the console, then telling the

test to run from within the console UI.

4.5 Results Reporting

4.5.1 Auto-generated?

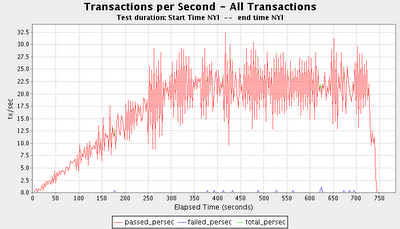

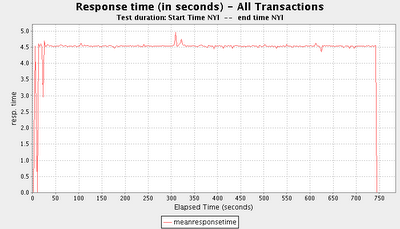

Having key graphs generated at the conclusion of a scale run, such

as load over time, server CPU, transactions per second, etc., can save

a lot of tedium, since manually generating these graphs from log

files is quite time consuming.

4.5.1.1 Load Runner

Load Runner has an excellent integrated analysis tool that can

dynamically generate graphs on any of the myriad performance counters

available to it. The downfall of this approach is that there are

only a small number of performance metrics it can gather on Solaris.

And while I can gather additional server metrics using sar, vmstat,

dtrace, iostat, mpstat, etc., integrating this information into the

Load Runner framework will be difficult at best.

4.5.1.2 JMeter

JMeter does not gather any server-side performance metrics.

But it can generate a limited number of client-side graphs while the

test is in progress. These graphs can be saved after the test is

over. Fortunately, all the test data is written in a standard

format. So it probably makes more sense to generate all the desired

graphs via shell scripts during post-processing. This is the same

approach I used with our previous test harness.

4.5.1.3 The Grinder

As with JMeter, there are no graphs generated out of the box,

but with the standard-format log files, scripted post-processing is

reasonably straightforward, giving us a powerful and flexible view on

the results.
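To give a feel for what such post-processing involves, here is a minimal sketch that turns a results log into a transactions-per-second series. The three-column CSV layout (`timestamp_ms,elapsed_ms,success`) is a made-up stand-in; the real JMeter and Grinder log layouts differ and would need their own parsing.

```python
import csv
import io
from collections import Counter

def transactions_per_second(log_text):
    """Count transactions in each one-second bucket.

    Assumes a hypothetical CSV layout: timestamp_ms,elapsed_ms,success
    Returns a sorted list of (second, count) pairs.
    """
    buckets = Counter()
    for row in csv.reader(io.StringIO(log_text)):
        if not row or row[0].startswith("#"):
            continue  # skip blank lines and comments
        timestamp_ms = int(row[0])
        buckets[timestamp_ms // 1000] += 1
    return sorted(buckets.items())
```

The resulting pairs can be fed straight into whatever plotting tool is at hand.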

4.5.2 Analysis tools?

4.5.2.1 Load Runner

Yes, a very powerful tool for doing analysis after a run. An

infinite number of customized graphs can be generated. These graphs

can be exported into an html report.

4.5.2.2 JMeter

Nothing included. I would want to transfer over some of the

scripts used in our previous test harness, or write a simple tool

that dumps test data into a DB for post-analysis.
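A simple version of such a tool could be sketched as follows, using SQLite from the Python standard library. The table name, column names, and row layout are assumptions chosen for the example, not something either tool defines.

```python
import sqlite3

def load_results(conn, rows):
    """Store (timestamp_ms, transaction, elapsed_ms, success) rows in SQLite."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        "timestamp_ms INTEGER, txn TEXT, elapsed_ms INTEGER, success INTEGER)"
    )
    conn.executemany("INSERT INTO results VALUES (?, ?, ?, ?)", rows)
    conn.commit()

def mean_elapsed_by_transaction(conn):
    """Return {transaction name: mean elapsed ms} for successful samples."""
    cursor = conn.execute(
        "SELECT txn, AVG(elapsed_ms) FROM results "
        "WHERE success = 1 GROUP BY txn ORDER BY txn"
    )
    return dict(cursor.fetchall())
```

With the data in a database, ad hoc questions after a run become one-line queries instead of new scripts.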

4.5.2.3 The Grinder

Nothing included. See the JMeter comments, above.

4.6 Simplicity of Agent management

4.6.1 Load Runner

This works well in Load Runner; each agent can run as a service or

an application, simplifying management. Test scripts are

auto-deployed to agents.

4.6.2 JMeter

JMeter is good here. Each agent is a server that the controller

can connect to at will in real-time. Test scripts are automatically

sent to each agent, centralizing management.

4.6.3 The Grinder

The Grinder is the weakest here. The properties files that define how

much load to apply must be manually deployed to all agents. A

wrapper shell script like the one used by our previous test harness

could address this by always deploying the Jython scripts to the

agents before each run.
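The kind of wrapper described above could start from something like this sketch, which renders a properties file and builds the copy commands without executing them. The agent host names, the remote directory, and the choice of `scp` are assumptions; `grinder.processes`, `grinder.threads`, `grinder.runs`, and `grinder.script` are property names from The Grinder 3.

```python
def render_properties(processes, threads, runs, script="grinder.py"):
    """Return the text of a grinder.properties file for one agent."""
    settings = [
        ("grinder.processes", processes),
        ("grinder.threads", threads),
        ("grinder.runs", runs),      # 0 means run until stopped
        ("grinder.script", script),
    ]
    return "".join("%s=%s\n" % (key, value) for key, value in settings)

def deploy_commands(agents, files, remote_dir):
    """Build one scp command per agent; nothing is executed here."""
    return [["scp"] + list(files) + ["%s:%s" % (host, remote_dir)]
            for host in agents]
```

Generating the file from one place keeps the load settings identical across agents, which is the main thing manual deployment tends to get wrong.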

4.7 Tool is cross-platform

4.7.1 Load Runner

Not really. A subset of the complete agent functionality can be

had for agents running on Linux or Solaris. Non-Windows agents run

each vuser as a process rather than a thread, reducing the amount of

load an agent can produce. The controller and VUGen both are

Windows-only. And Load Runner is poor at measuring non-Windows

server statistics.

4.7.2 JMeter

Yes. Java/Swing app is platform-agnostic.

4.7.3 The Grinder

Yes. This app is based on Java, Swing, and Jython. Like JMeter,

it will run anywhere you can set up a JVM.

4.8 Cost

4.8.1 Load Runner

Expect to pay in the low to mid six-figures for a license allowing

any kind of robust load-generation capacity. But that's not all:

there are high ongoing support costs as well. For the same kind of

money I could get over 100 powerful machines to use as scale agents,

as well as associated network switches, cabling, etc.

4.8.2 JMeter

Free. (Apache License)

4.8.3 The Grinder

Free. (Grinder License)

4.9 Intended audience/technical level

4.9.1 Load Runner

Load Runner has the widest audience of all these tools; perhaps

not surprising given its maturity as a commercial product. Its

browser-recording and icon-based script development give it the

lowest technical barriers to entry of any of the three products. A

QA engineer with modest technical background and little to no coding

skills can still be productive with the tool. And its ability to load

Windows .dll's and other libraries gives it a power and flexibility

useful to developers and other more advanced users.

4.9.2 JMeter

JMeter does not require developer skills to perform basic tests in

any of the protocols it supports out of the box. A form-driven UI

allows the user to design their own scenario. This scenario is then

auto-deployed to all agents during test initialization.

4.9.3 The Grinder

While it's possible that a regular QA engineer could be used to

run the console and perform some testing, the tool is really more

aimed at developers. This is the only tool of the three that did not

include any kind of icon-based or UI-based script development. At a

minimum, users will need to know how to write Python/Jython code to

create simple test scripts, and the ability to write custom Java

classes may be required as well, depending on the scenario.

4.10 Stability/Bugginess

4.10.1 Load Runner

The controller crashes occasionally under heavy load, but this is

infrequent and largely manageable. Other than this, the product

seems robust enough.

4.10.2 JMeter

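JMeter fares poorly in this area. Several of the key monitors in its UI

gave incorrect information or did not work at all, and the log showed

many null pointer exceptions.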

4.10.3 The Grinder

I found no issues with the Grinder, other than the

previously-mentioned memory

issue with large file downloads.

5 AGENTS

5.1 Power of transactions

5.1.1 How flexible on what can be passed/failed?

5.1.1.1 Load Runner

Any arbitrary criteria can be set to define if a transaction

passes. This includes but is not limited to response time, contents

of response body, response code, or just about anything else.

5.1.1.2 JMeter

In JMeter, samplers generate your test requests. You can add a

wide variety of assertion types to any of your samplers. These will

allow you to assert on response code, match regular expressions against

the response body, or assert on the size or MD5 sum of the response.
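For readers who have not used assertions of this kind, the checks amount to something like the sketch below. This is plain Python written for illustration, not JMeter code; the function name and parameters are invented for the example.

```python
import hashlib
import re

def check_response(status, body, expected_status=200, pattern=None,
                   max_size=None, md5_hex=None):
    """Return a list of failure messages; an empty list means the sample passed.

    body is the response payload as bytes.
    """
    failures = []
    if status != expected_status:
        failures.append("status %d != %d" % (status, expected_status))
    if pattern is not None and not re.search(
            pattern, body.decode("utf-8", "replace")):
        failures.append("pattern %r not found" % pattern)
    if max_size is not None and len(body) > max_size:
        failures.append("size %d > %d" % (len(body), max_size))
    if md5_hex is not None and hashlib.md5(body).hexdigest() != md5_hex:
        failures.append("md5 mismatch")
    return failures
```

Returning a list of failures rather than a single boolean makes it easier to see which check tripped when a sample fails.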

5.1.1.3 The Grinder

As with Load Runner, pass/fail criteria merely have to be defined

within the test script. Criteria can be whatever you want.

5.1.2 User-defined transaction/statistic types?

5.1.2.1 Load Runner

Yes – if you get away from the icon-based view in VUGen and go

to the code level, you can wrap anything you want in a transaction to

get timing information, pass/fail data, etc.

5.1.2.2 JMeter

Yes – done through plugins.

5.1.2.3 The Grinder

Yes – an API exists to easily wrap any Java or Jython method in

a transaction.

5.2 Other Protocols

5.2.1 Which protocols are supported out of the box?

5.2.1.1 Load Runner

This varies by the type of license purchased, with each protocol

having a separate cost and a separate limit for the number of

allowable VUsers. The potential number of protocols is extremely

high, including Java, ODBC, FTP, HTTP, and others.

5.2.1.2 JMeter

Supports several protocols out of the box:

5.2.1.3 The Grinder

The Grinder only supports HTTP out of the box.

5.2.2 Can transactions wrap custom (non-http) protocols? Can

transactions wrap multiple (http or other) requests to the server?

5.2.2.1 Load Runner

Yes. There are multiple ways to do this. You can implement your

own protocol handler in a .dll or in Load Runner's pseudo-C. Then

you can invoke this handler from any type of VUser that you have a

license for. Alternately, unless your protocol is something

uncommon, you can probably buy a pre-existing implementation of your

protocol, and licenses for VUsers to run this protocol.

5.2.2.2 JMeter

Yes. An external Java plugin that supports your protocol must be

added in to JMeter to support this.

5.2.2.3 The Grinder

Any protocol can be tested with the Grinder. An HTTP plugin is

included. In other cases, you will create a separate Java class that

implements a handler for your protocol. In your test script, you

will wrap this Java class in a Grinder test object. Your protocol is

used/invoked by calling any method you want from your Java class via

the test wrapper. The wrapper will pass/fail the transaction based

on response time.

This default behavior can be overridden with additional code in

your Jython script. For example, after invoking your protocol

method, you could inspect the state of your Java object and pass/fail

the transaction based on information there.
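The wrap-then-inspect pattern described above can be illustrated with the stand-in below. It is plain Python and deliberately does not use The Grinder's API: the `TimedTest` class, its `wrap` method, and the `send_request` handler are all hypothetical names that only mimic the idea of timing a call and then applying a custom pass/fail check.

```python
import time

class TimedTest:
    """Records timing and pass/fail results for calls made through wrap()."""

    def __init__(self, number, description):
        self.number = number
        self.description = description
        self.results = []  # list of (elapsed_seconds, passed)

    def wrap(self, func, passed=lambda result: True):
        """Return a callable that times func and applies a pass/fail check."""
        def timed(*args, **kwargs):
            start = time.perf_counter()
            try:
                result = func(*args, **kwargs)
                ok = bool(passed(result))
            except Exception:
                # An exception counts as a failed transaction.
                self.results.append((time.perf_counter() - start, False))
                raise
            self.results.append((time.perf_counter() - start, ok))
            return result
        return timed

# Hypothetical protocol handler: returns a status string.
def send_request(payload):
    return "OK" if payload else "EMPTY"

test = TimedTest(1, "custom protocol request")
request = test.wrap(send_request, passed=lambda status: status == "OK")
request("hello")   # recorded as a pass
request("")        # recorded as a fail
```

The point of the design is that the protocol code stays unaware of the test harness; only the wrapper knows about timing and pass/fail.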

5.3 Capacity of single agent to generate load, particularly in

high-bandwidth scenarios

I have typically seen libraries like Apache's HTTPClient max out

the CPU to 100% when it's conducting high-bandwidth, large file

downloads. The library supports high bandwidth use and many

transactions per second just fine, but has issues with repeated large

file downloads.

5.3.1 Load Runner

Per-agent load generation capacity is strong. Licensing

constraints may limit actual load generated.

5.3.2 JMeter

With the exception of the high-bandwidth case, per agent capacity

is good.

5.3.3 The Grinder

Runs out of memory when repeatedly downloading large documents in

many threads. Currently, there does not seem to be a workaround

inside The Grinder itself. However, with my previous test harness I

was able to work around this same issue by calling native code, and

there is reason to believe that approach may work here as well.

5.4 Can support IP spoofing

Assuming a large range of valid IP addresses assigned to the agent

machines, does the test harness support binding outgoing requests to

arbitrary IP addresses? The ability to support this is critical for

our test effort. If all broker requests come in from the same IP

address, the broker thrashes unrealistically as it continually

updates customer settings.

5.4.1 Load Runner

Yes. (see link in appendix 1)

5.4.2 JMeter

JMeter is weak here. There is a new mechanism (not yet released

but available in nightly builds) where outbound requests can

round-robin on a predetermined list of local IPs. This is not good

enough for Fat Client simulation.

5.4.3 The Grinder

The local IP address to bind the outbound request to can be

specified in the Jython scripts. This is just what I need.
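The underlying mechanism is ordinary socket binding. The sketch below shows the idea with the Python standard library rather than with The Grinder's own HTTP plugin, so the function name and its parameters are illustrative assumptions only.

```python
import socket

def connect_from(local_ip, host, port, timeout=5.0):
    """Open a TCP connection whose source address is bound to local_ip.

    local_ip must already be assigned to an interface on this machine.
    Port 0 lets the OS pick any free source port.
    """
    return socket.create_connection(
        (host, port), timeout=timeout, source_address=(local_ip, 0)
    )
```

With a pool of addresses assigned to each agent machine, every simulated client can be given its own source IP simply by passing a different `local_ip`.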

5.5 Can support variable connection speed/bandwidth throttling

5.5.1 Load Runner

Load Runner supports this out of the box.

5.5.2 JMeter

JMeter does not support this out of the box, but there is a slow

socket implementation in the wild, written for the Apache HTTP Client

(which JMeter uses), that should be possible to drop in fairly

easily.
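The idea behind a slow socket is simply to pause between reads so the average transfer rate stays under a cap. The sketch below shows that idea in plain Python; it is not the Java implementation mentioned above, and the function name and parameters are assumptions for the example.

```python
import time

def throttled_read(stream, bytes_per_second, chunk_size=4096,
                   clock=time.monotonic, sleep=time.sleep):
    """Read stream to the end, pausing so the average rate stays under the cap.

    Returns the total number of bytes read.
    """
    start = clock()
    total = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            return total
        total += len(chunk)
        # Earliest time at which `total` bytes may have arrived at the cap.
        earliest = start + total / float(bytes_per_second)
        delay = earliest - clock()
        if delay > 0:
            sleep(delay)
```

Passing the clock and sleep functions as parameters keeps the throttle easy to test without waiting in real time.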

5.5.3 The Grinder

The Grinder does not support this. It may be possible with

additional code hacking, but the path for this is not clear. Their

third-party HTTP implementation means writing a custom solution may

be challenging. Perhaps it would be possible using JNI and libCurl,

although the author of the libCurl binding suggests there may be a

memory leak in the C layer.

5.6 Can run arbitrary logic and external libraries within agent

5.6.1 Load Runner

Windows .dll's may be loaded. Home-made libraries written in Load

Runner's pseudo-C work fine as well. Additionally, function

libraries can be embedded directly in the virtual user script.

5.6.2 JMeter

External Java libraries can be accessed via the plugin

architecture.

5.6.3 The Grinder

The Grinder offers lots of flexibility for loading and executing

third party libraries. With Jython, any Java code may be called, and

most Python code may be run unchanged. And there is a decent

collection of example scripts that comes with the Grinder

distribution.

5.7 Scheduling

5.7.1 Load Runner

Load Runner has a powerful, UI-based scheduling tool which allows

you great flexibility to schedule arbitrary amounts of load over

time. Load can be incrementally stepped up and stepped down, by

single threads or entire groups. There is a graphical schedule

builder that can generate schedules of arbitrary complexity.

5.7.2 JMeter

JMeter has UI-based scheduling that allows per-thread startup

delays, as well as runs that start in the future. JMeter tests can

run forever, for a specified time interval, or for a specified number

of iterations for each thread.
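Per-thread startup delays boil down to simple arithmetic. The sketch below computes a linear ramp in plain Python; the even spacing and the function name are assumptions for illustration and are not taken from JMeter's source.

```python
def ramp_delays(thread_count, ramp_seconds):
    """Return the start delay in seconds for each thread in a linear ramp.

    Thread 0 starts immediately; the rest are spaced evenly so that the
    last thread starts just before ramp_seconds has elapsed.
    """
    if thread_count <= 0:
        return []
    step = ramp_seconds / float(thread_count)
    return [i * step for i in range(thread_count)]
```

For example, four threads ramped over 60 seconds would start 15 seconds apart.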

5.7.3 The Grinder

No per-thread ramp-in. No generic scheduling tool. Primitive

per-process (instead of per-thread) scheduling is possible but use of

this feature probably reduces an Agent's maximum load-generation

capacity, as the overhead of running a new process is far greater

than the overhead of creating a new thread.

6 CONTROLLER

6.1 Ability of Controller to handle high volume of agent data

6.1.1 Load Runner

Load Runner probably handles as much real-time data as any

product out there, or more. But it does so effectively. If you give the

controller a beefy box to run on, you should have no problems.

6.1.2 JMeter

Limited. The number of transaction monitors you can have running

is configurable. If more than one or two are going and the agents

are producing a lot of transaction data, the UI takes all the CPU,

bogs down and becomes unusable.

6.1.3 The Grinder

The Grinder does very well here, probably better than Load Runner.

By design, the agents only send a limited amount of real-time data

back to the controller during a test run. And the sampling period is

adjustable with a big friendly slider. This is a handy feature I

didn't fully appreciate at first – if the network bandwidth numbers

are updating too fast, it's hard to see how many digits are in the

number before it updates again. But with the slider, you can lock

that number down for enough time to really consider it.

6.2 Real-Time Monitoring (Controller)

6.2.1 Load Runner

Load Runner features very strong real-time monitoring in the

controller. Client side graphs, such as total transactions per

second, errors per second, can be displayed next to server side

graphs like CPU use and disk activity. The user can drag and drop

from a list of dozens of graph types.

6.2.2 JMeter

Basic, table-based monitoring similar to what is in our previous

test harness works properly. Other monitors threw null pointer

exceptions.

6.2.3 The Grinder

The Grinder is good here. It has simple, sliding performance

graphs for all transactions in one tab. These graphs are similar to

what you see in the Windows Task Manager, where performance metrics

older than a given amount of time slide off the left side of the

graph. In addition, as in our previous test harness or JMeter, there

is numeric data that periodically updates in a table.

6.3 Real-Time Load Adjustment

Sometimes while a test is in progress, you want to make

adjustments. Increase the load. Decrease the load. Bring another

agent online.

6.3.1 Load Runner

Load Runner wrote the book on this topic, with its highly-flexible

ability to start and stop load in the middle of a test, with

individual agents, groups of agents, or the entire set of agents.

6.3.2 JMeter

JMeter has the ability to interactively start and stop load on an

agent-by-agent basis. It cannot interactively be done at the

per-thread level, but agents and thread groups can have schedulers

assigned to them.

6.3.3 The Grinder

The Grinder console does not have the ability to dynamically

adjust the levels of load being generated by the agents. Coupled

with its lack of a scheduler, this makes the Grinder the least

flexible of the three tools when it comes to interactively setting

load levels.

6.4 Controller-side script management/deployment

6.4.1 Load Runner

Yes.

6.4.2 JMeter

Yes.

6.4.3 The Grinder

Yes.

6.5 Can write simple scripts in the UI?

6.5.1 Load Runner

Load Runner comes with a powerful script-development tool, VUGen.

This gives the test developer the option of developing icon-based

test scripts, as well as the traditional code-view development

environment. In addition, Load Runner can record web browser

sessions to auto-generate scripts based on the recorded actions.

6.5.2 JMeter

Scripts are based on XML. They can be written in your preferred

text editor, or created in an icon-based UI in the controller window. I found this feature to be both easy to use and surprisingly flexible.

There is also a recorder feature to let you interactively create

your scripts.

6.5.3 The Grinder

The Grinder is the weakest of the three here. It does have a TCP Proxy

feature that can record browser sessions into Jython scripts. But there

is no integrated graphical environment for script development.

7 CONCLUSION

I selected The Grinder because the other two tools fell short on several make-or-break issues.

However, each tool has unique strengths and weaknesses. Which tool

is ultimately best for you depends on a number of things, such as:

Does your budget allow for an expenditure ranging from several

tens to hundreds of thousands of dollars?

Will you be testing in a Windows-only environment?

What is the technical level of your scale testers?

Both of the open source projects have merits, but

neither one is ideal. My approach will be to work with the

Grinder development team to resolve the most serious offenders.

8 Appendix 1 – Additional information

Load Runner system requirements (controller must be on Windows!)

http://www.mercury.com/us/products/performance-center/loadrunner/requirements.html

Linux/Solaris server monitoring (weak)

http://www.mercury.com/us/products/performance-center/loadrunner/monitors/unix.html

JMeter home page

http://jakarta.apache.org/jmeter/

JMeter Manual

http://jakarta.apache.org/jmeter/usermanual/index.html

The Grinder home page

http://grinder.sourceforge.net/

The Grinder Manual

http://grinder.sourceforge.net/g3/getting-started.html

Windows IP address multi homing

http://support.microsoft.com/kb/q149426/

9 Appendix 2 – Distinguishing features

These are some of the distinguishing features of each product:

Cool with Load Runner

highly developed, mature product

strong support

It is complex, but feature-rich

Problems w/ Load Runner

Extreme cost, both up front and ongoing

Limited load generation capacity based on license/key.

Limited ability to monitor server stats outside of Windows.

Cool w/ Grinder

Jython scripting means rapid script development

Jython simplifies coding complex tasks

Good real-time feedback in the UI in most tabs.

Sockets based agent/controller communications. Trouble-free

in our testing.

Problems w/ Grinder:

(Since this original article was posted, many of these issues have been addressed. See the blog entry titled

"The Grinder: Addressing the Warts.")

Cool w/ JMeter:

- Less technical expertise required

- Overall more “slick” or “polished” feel –

availability of startup scripts, more utility in the UI.

Problems w/ JMeter:

- Limited feedback in the UI when the test is running

- Memory and CPU issues when downloading very large files

- The UI is buggy. Big pieces, including monitors, just don't

work. Many Null Pointer Exceptions in the log, etc.